This is a brief update to a previous post on how to measure conspiracy beliefs. My point in the previous post was that a study published in Psychological Medicine used weird measures to capture conspiracy beliefs.

In a letter to the editor, Sally McManus, Joanna D’Ardenne and Simon Wessely note that the response options provided in the paper are problematic: “When framing response options in attitudinal research, a balance of agree and disagree response options is standard practice, e.g. strongly and slightly disagree options, one in the middle, and two in agreement. Some respondents avoid the ‘extreme’ responses either end of a scale. But here, there was just one option for ‘do not agree’, and four for agreement (agree a little, agree moderately, agree a lot, agree completely).”

The authors of the study replied to the letter and, in brief, doubled down on their conclusions: “Just because the results are surprising to some – but certainly not to many others – does not make them inaccurate. We need further work on the topic and there is clearly enough from the survey estimates to warrant that.”

Interestingly, we now have further work on the topic. In a new study, “Agreeing to disagree: Reports of the popularity of Covid-19 conspiracy theories are greatly exaggerated”, Robbie M. Sutton and Karen M. Douglas (both from the University of Kent) show that the measures in the study mentioned above (and in my previous post) are indeed problematic.

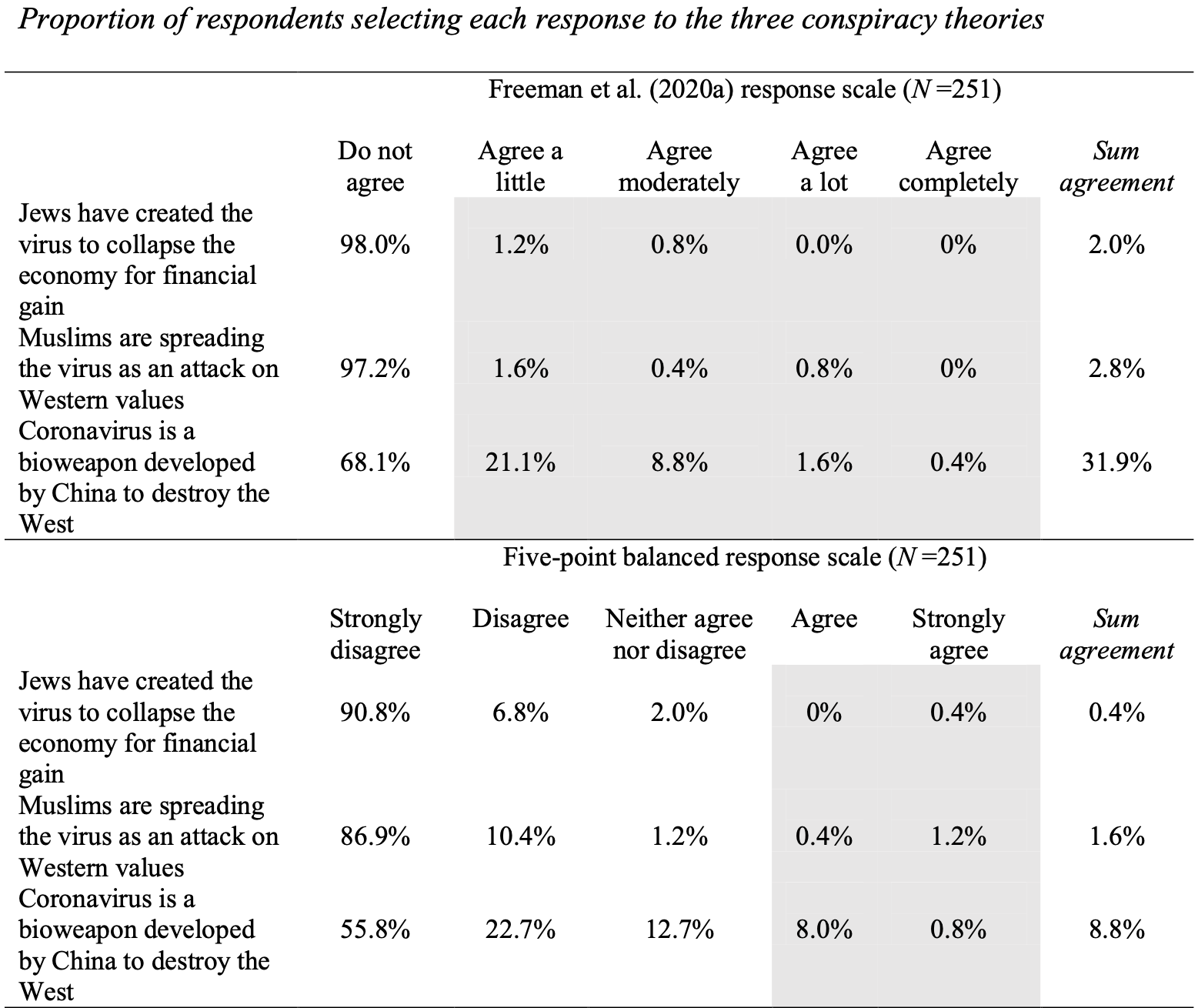

The figure shows the key result, i.e. the sum agreement (the total share of respondents choosing any ‘agree’ option) with specific conspiracy beliefs using the different scales.

The shaded areas are the ‘agree’ options in the scale (with more agree options provided in the original study). What we can see is that the sum agreement is substantially greater when using the problematic scale. For a conspiracy belief such as ‘Coronavirus is a bioweapon developed by China to destroy the West’, the ‘Strongly disagree-Strongly agree’ scale results in a sum agreement of 8.8%, whereas the scale used by the authors of the original study results in a sum agreement of 31.9%.
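To make the arithmetic concrete, here is a minimal Python sketch of how a sum agreement is computed and how far apart the two reported figures are. The only real numbers are the 8.8% and 31.9% quoted above. The labels of the balanced scale and the helper names (`sum_agreement`, `agree_share_of_options`) are my own assumptions for illustration, not something taken from either study.

```python
# Response options of the original study, as quoted in the letter to the editor.
ORIGINAL_SCALE = [
    "do not agree",
    "agree a little",
    "agree moderately",
    "agree a lot",
    "agree completely",
]
ORIGINAL_AGREE = set(ORIGINAL_SCALE[1:])  # four of the five options are 'agree'

# A balanced five-point scale. These labels are assumed for illustration.
BALANCED_SCALE = [
    "strongly disagree",
    "slightly disagree",
    "neither agree nor disagree",
    "slightly agree",
    "strongly agree",
]
BALANCED_AGREE = {"slightly agree", "strongly agree"}  # two of five options


def sum_agreement(shares, agree_options):
    """Add up the percentage of respondents who chose any 'agree' option."""
    return sum(pct for option, pct in shares.items() if option in agree_options)


def agree_share_of_options(scale, agree_options):
    """Fraction of the available response options that express agreement."""
    return len(agree_options) / len(scale)


# Sum agreement for the bioweapon item, as reported in the text above.
balanced_pct = 8.8
original_pct = 31.9

difference = round(original_pct - balanced_pct, 1)  # percentage points
ratio = round(original_pct / balanced_pct, 1)       # how many times larger

print(f"Agree options: {agree_share_of_options(ORIGINAL_SCALE, ORIGINAL_AGREE):.0%} "
      f"of the original scale vs "
      f"{agree_share_of_options(BALANCED_SCALE, BALANCED_AGREE):.0%} of the balanced scale")
print(f"Gap: {difference} percentage points (ratio {ratio})")
```

In other words, the unbalanced scale yields a sum agreement roughly three and a half times as large for this item, a gap of about 23 percentage points.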

In sum, this is an interesting case of how (not) to measure conspiracy beliefs and how researchers from the University of Oxford themselves can contribute to the spread of such conspiracy beliefs. Or, as Robbie M. Sutton and Karen M. Douglas conclude: “As happens often (Lee, Sutton, & Hartley, 2016), the striking descriptive statistics of Freeman et al.’s (2020a) study were highlighted in a press release that stripped them of nuance and caveats, and led to some sensational and misleading media reporting that may have complicated the very problems that we all, as researchers, are trying to help solve.”