The liar paradox is the logical paradox in the statement “I am lying”. If you are telling the truth about lying, are you then indeed lying? I have been thinking about this paradox and how it might also be relevant for survey research, with implications for our understanding of measurement error and for how we interpret findings in scientific studies.

To illustrate the paradox, let us look at data from the American National Election Studies 2020 Exploratory Testing Survey. In this survey, you will find the question “We sometimes find people don’t always take surveys seriously, instead providing funny or insincere answers. How often did you give a serious response to the questions on this survey?”

In the survey, 54 respondents answered that they never gave a serious response to the questions, while 82% of respondents answered that they always did. But if you never give a serious response, should we believe you when you say that you never give a serious response?

Why should we care about this? It demonstrates one of the limitations of self-reported data. People do not always tell the truth, and we have – in most cases – no easy way of knowing whether a respondent is telling the truth or not. Accordingly, there are limitations to what we can understand simply by asking people. I have previously published research on the limitations of self-reported survey data (see, for example, here), but we should pay a lot more attention to the limitations of self-reported data, and ideally replicate any findings with other-reported data and behavioural data.

Why, for example, would people be honest about how often they lie in a survey? When asked, there is a strong social desirability bias towards saying that you tell the truth. We have different scales that try to capture people’s tendency to give socially desirable responses, such as the Marlowe-Crowne Social Desirability Scale, the Edwards Social Desirability Scale, and the Balanced Inventory of Desirable Responding, but I do not find these self-reported scales useful in this context.

If you always lie in surveys, wouldn’t you say that you never lie? And can we believe that the people who say they lie are the people who actually lie? In other words, even with enough data, would it make sense to examine the correlates of not telling the truth in surveys? I don’t think so.

For that reason, it is useful to take the liar paradox into account when we consider the representativeness of our data (or rather the lack thereof). There are definitely people out there who would not provide reliable answers to surveys when asked. The good news is that these people are also less likely to participate in surveys, or more likely to fail attention checks and be excluded from them.

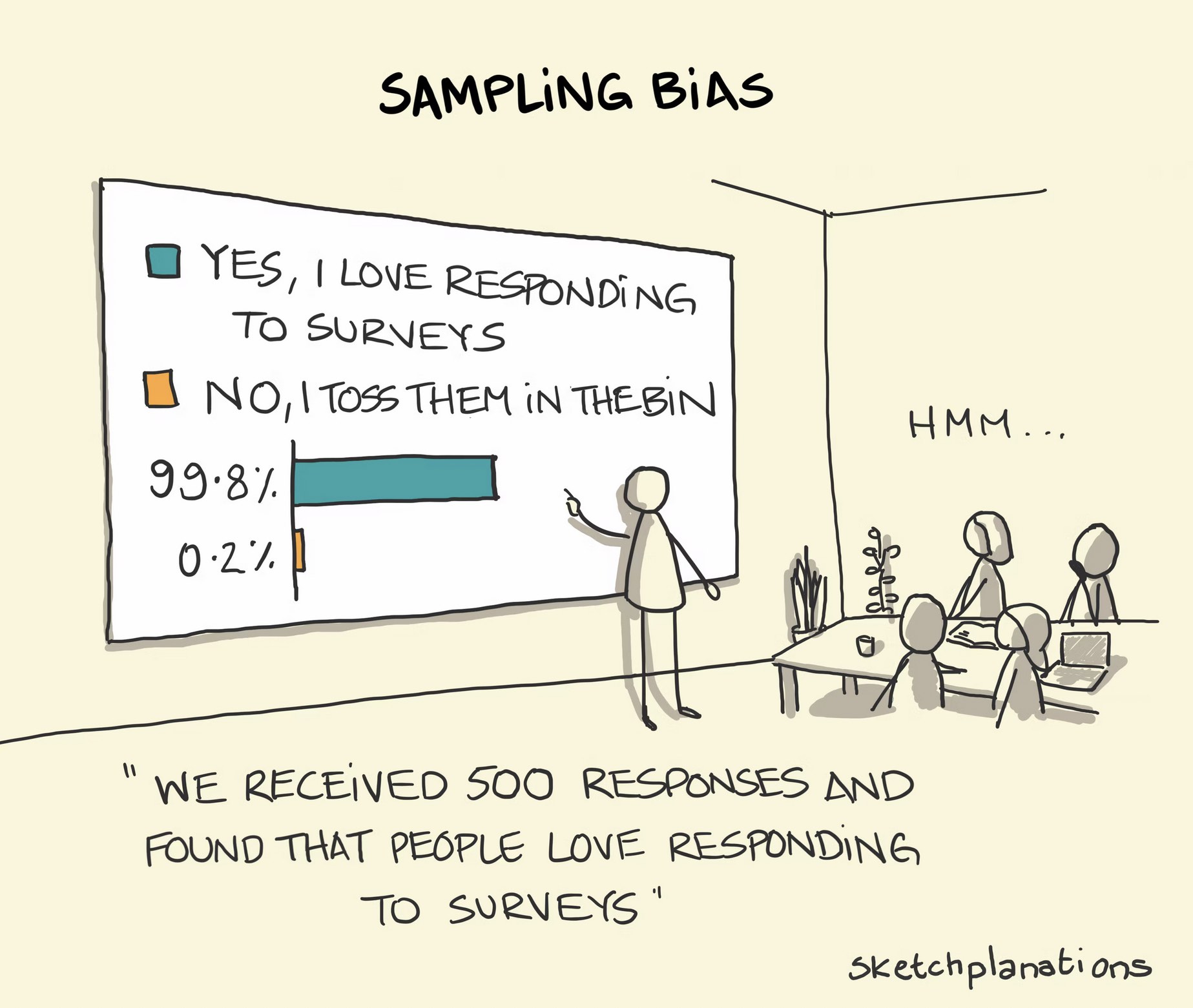

People conducting surveys do whatever they can to only include respondents who provide serious answers. Here is a relevant cartoon from Sketchplanations showing the challenge of using self-reported survey data to answer specific questions:

Specifically, if responses to the questions we are asking are correlated with the propensity to participate in the survey, we will get biased data (see also the funny comic by Randall Munroe on selection bias). The people who are more likely to lie in surveys are probably also less likely to participate in them. For that reason, even if people answered the specific question about lying completely honestly, their answers would not necessarily provide a representative estimate of the extent to which people in general lie in surveys.
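The selection argument can be illustrated with a small simulation. This is a minimal Python sketch under purely hypothetical assumptions: 10% of the population are habitual survey liars, liars take part in a survey with probability 0.05 and everyone else with probability 0.20, and, unlike in reality, every participant answers the question about lying honestly. None of these numbers come from the ANES data, and the function names are illustrative.

```python
import random


def expected_respondent_share(share_liars, p_liar, p_honest):
    """Expected share of liars among respondents, given hypothetical
    participation probabilities for liars (p_liar) and non-liars (p_honest)."""
    liars = share_liars * p_liar
    honest = (1 - share_liars) * p_honest
    return liars / (liars + honest)


def simulate_respondent_share(n_population, share_liars, p_liar, p_honest, seed=1):
    """Draw a population, let each person decide whether to participate,
    and return the share of liars among those who took part.
    Assumes every participant reports honestly whether they lie."""
    rng = random.Random(seed)
    respondents = 0
    liar_respondents = 0
    for _ in range(n_population):
        is_liar = rng.random() < share_liars
        p_participate = p_liar if is_liar else p_honest
        if rng.random() < p_participate:
            respondents += 1
            liar_respondents += is_liar  # True counts as 1
    return liar_respondents / respondents


if __name__ == "__main__":
    # Hypothetical parameters, not estimates from any survey.
    params = dict(share_liars=0.10, p_liar=0.05, p_honest=0.20)
    print("Population share of liars:", params["share_liars"])
    print("Expected share among respondents:",
          round(expected_respondent_share(**params), 3))
    print("Simulated share among respondents:",
          round(simulate_respondent_share(100_000, **params), 3))
```

With these made-up parameters, liars make up only about 2.7% of respondents (0.10 × 0.05 / (0.10 × 0.05 + 0.90 × 0.20)) even though they are 10% of the population, so perfectly honest answers from participants would still understate the population share.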

It also forces us to consider measurement error more generally. For any question you ask, there will always be a small minority of respondents who pick a given answer, even if that option makes no sense. There are multiple reasons for this: people might select the wrong answer by mistake, misunderstand the question, lie, pick answers at random, etc.

As a rule of thumb, expect about 4% of respondents to pick even the craziest response category. Scott Alexander calls this Lizardman’s Constant, because in a survey on conspiracy theories, 4% of Americans said they believed that lizardmen are running the Earth.

I thought about this the other day when I saw a new study using a representative survey to examine Americans’ support for political violence. This study concludes that, among all respondents, 4% thought it at least somewhat likely that “I will shoot someone with a gun.” When I see a number like that derived from a survey, I am not convinced that 4% of Americans find it likely that they will shoot someone with a gun.

This is not to say that these 4% are definitely lying, or that the true proportion in the population is significantly different from 4%, but that measurement error is something to take into account, especially when we are looking at small numbers and have reasons to believe that a (small) group of respondents in a survey might lie. And when such respondents are not telling the truth, they do not necessarily pick answers at random. Alas, we may be dealing with non-random errors.
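The difference between random and non-random error can be made concrete with a back-of-the-envelope calculation. In the minimal Python sketch below, a hypothetical 4% of respondents answer insincerely, everyone else reports their true view, and the question has four response options. If the insincere respondents pick among the options at random, they add only about one percentage point to any given option; if they deliberately pick the most extreme option, they add four. The figures and function names are illustrative assumptions, not estimates from the study on political violence.

```python
import random


def expected_share(true_share, insincere_share, insincere_pick_prob):
    """Expected share choosing an option when sincere respondents choose it
    with probability true_share and insincere respondents (a fraction
    insincere_share of the sample) choose it with insincere_pick_prob."""
    return (1 - insincere_share) * true_share + insincere_share * insincere_pick_prob


def simulate_share(true_share, insincere_share, insincere_pick_prob, n, seed=0):
    """Simulate one survey of n respondents under the same assumptions."""
    rng = random.Random(seed)
    picks = 0
    for _ in range(n):
        insincere = rng.random() < insincere_share
        p_pick = insincere_pick_prob if insincere else true_share
        picks += rng.random() < p_pick
    return picks / n


if __name__ == "__main__":
    insincere_share = 0.04  # hypothetical share of insincere respondents
    n_options = 4           # hypothetical number of response options
    for true_share in (0.0, 0.02):
        at_random = expected_share(true_share, insincere_share, 1 / n_options)
        deliberate = expected_share(true_share, insincere_share, 1.0)
        simulated = simulate_share(true_share, insincere_share, 1.0, n=1_000)
        print(f"true {true_share:.0%}: random error {at_random:.1%}, "
              f"non-random error {deliberate:.1%}, "
              f"one simulated survey {simulated:.1%}")
```

Under these assumptions, an option that no sincere respondent would choose still shows up at around 4% when the error is non-random, and a true 2% becomes roughly 6%.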

You can find a lot of surveys where the numbers are so small that, once you consider Lizardman’s Constant, it is not relevant whether the number is 2% or 4%. For example, two percent of people say they prefer the middle seat on a plane when travelling alone. And five percent of people say they feel very positive towards seagulls.

I have seen researchers try to develop self-reported measures and scales tapping into the extent to which people are basically trolling. However, survey data like this should make us question the psychometric properties of such scales. I don’t see any reason to go into specific examples here, but if a scale is trying to capture the extent to which a respondent is a troll, why believe that the respondent is telling the truth when answering the questions in the scale?

The next time you see a very small number in a survey, do take it with a grain of salt – especially if there is a reason to believe that not all respondents in the survey will tell the truth.