I recently gave a keynote speech at the conference Sondagens: da Conceção ao Impacto. Luckily, I could give the talk in English (and not Portuguese). Alas, it had to be online. The title of my talk was ‘Opinion polls are bad and we need more of them’.

As the English translation of the conference title is ‘Surveys: From Conception to Impact’, I decided to focus on how opinion polls are conducted and reported, i.e., the process of how opinion polls develop from numbers to news. In doing this, I presented the core findings from our book, Reporting Public Opinion: How the Media Turns Boring Polls into Biased News, on how media outlets select and report opinion polls.

I deliberately picked a title for my talk that would spark discussion rather than trying to sell one key idea. My ambition was to facilitate a good discussion throughout the rest of the event. I have never given a keynote speech before (and I rarely give talks anymore), but my sense is that it worked okay.

I aimed to convey various points in my talk. I will not go through all of them here but simply provide a few examples of the points I believe should get more attention (and that I have not covered sufficiently in previous posts).

First, opinion polls cannot speak for themselves. A lot of the challenges we face when talking about opinion polls are not only about opinion polls, but rather how they are (mis)treated by pundits, journalists, politicians, ordinary people, statisticians, etc. For that reason, it is important to work with a distinction between how opinion polls are conducted and how opinion polls are reported. We can easily conflate the two and that makes it difficult to fully understand the limitations and challenges we are dealing with.

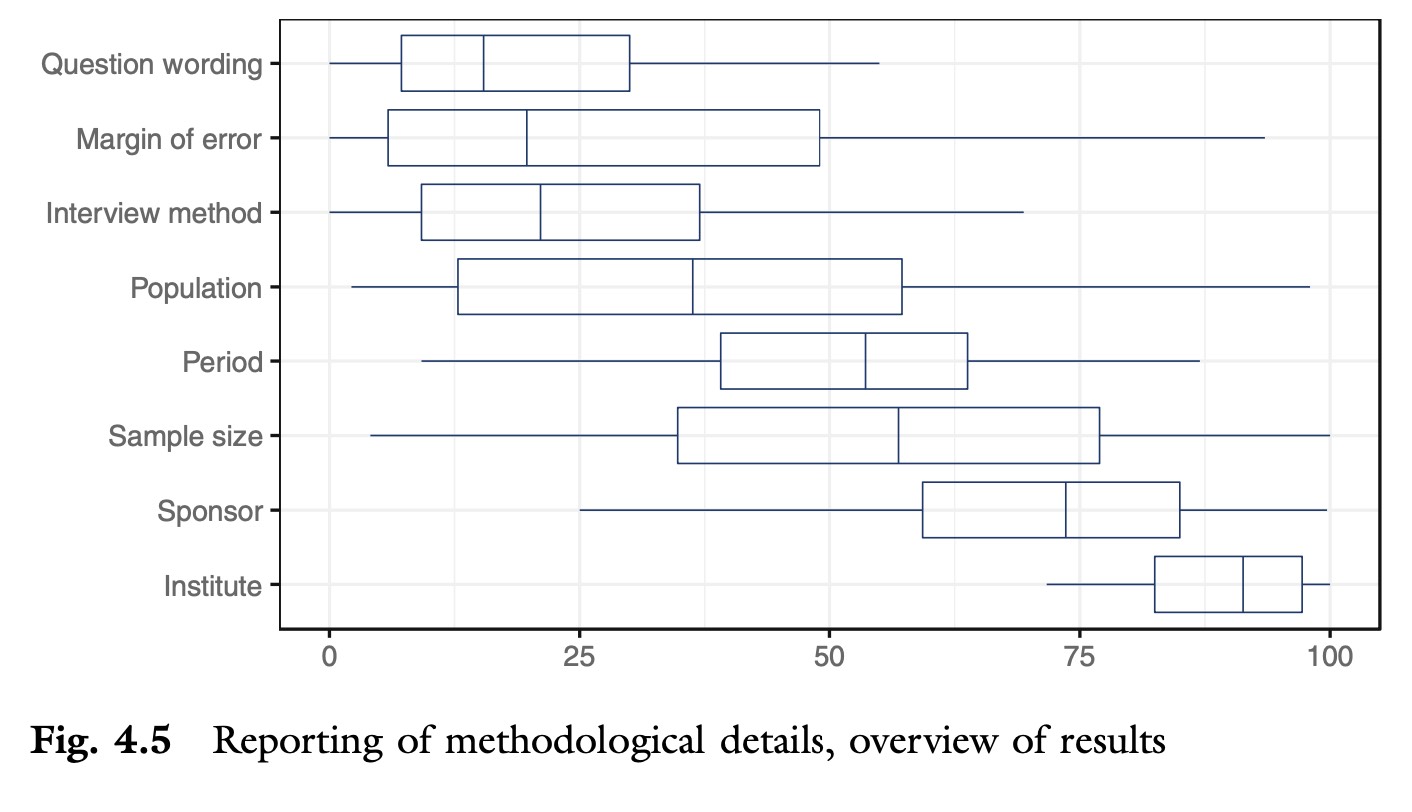

Second, the quality of the coverage of opinion polls can, on average, be improved. Ten years ago, following the 2011 Danish general election, Simon Straubinger and I conducted an analysis of the quality of the coverage of opinion polls. We found significant differences in the extent to which media outlets in Denmark report methodological details when covering opinion polls. In the book, Zoltán Fazekas and I reviewed 30 published studies analysing the reporting of methodological details in the coverage of opinion polls (Table 4.6 in the book provides an overview of all studies with information on the country of the study, the time period, number of articles, the media, as well as the campaign context). For each of the studies we collected information on nine features: whether they reported a sponsor (media outlet commissioning the poll), sample size, question wording, population, period, statistical uncertainty/margin of error, interview method (e.g., phone interviews) and institute (polling firm). If a study provided such information, we coded how many of the articles in the analysis reported this information (as a percentage). Of course, there are many other features that could be included, but we found these nine to be most important (see Table 4.1 in the book for an overview of different types of methodological information and their inclusion in different methodological standards). Figure 4.5 in the book provides an overview of how often methodological details, on average, are included in the coverage of opinion polls based upon the evidence presented in the 30 studies:

We see that the institute conducting the poll is mentioned in a majority of all articles on opinion polls. This is a good sign but it should really be mentioned in 100% of the coverage. If you cannot see who conducted an opinion poll, you should not take the poll seriously. Interestingly, and sadly, while there is a lot of variation, the margin of error is the key methodological detail that is rarely mentioned. My sense is that media outlets are becoming more aware of the importance of reporting the margin of error, but most of the analyses in the literature suggest that the coverage in most cases omits information on the margin of error. The question wording is the methodological detail that is included the least in the coverage. This is not necessarily a concern and only emphasises that not all methodological details are of equal importance. For example, as most political polls are about vote intention, it is often not important to know the exact question wording, as the question rarely changes over time.

Third, opinion polls are no worse today than in the past. This is a point I often mention when I talk about opinion polls. Opponents of opinion polls argue that polls are biased. That is, opinion polls are bad at predicting elections (e.g., the 2016 US presidential election) and referendums (e.g., Brexit). However, there is little evidence to support the claim that opinion polls are bad. Of course, there are examples of challenges with opinion polls in specific cases, but overall opinion polls are good at predicting election outcomes. The key study to read here is Jennings and Wlezien (2018). From the abstract: “We find that, contrary to conventional wisdom, the recent performance of polls has not been outside the ordinary.”

Fourth, we should focus on the cognitive biases that can explain how people engage with opinion polls. I believe there are at least three different, yet interrelated, biases that can explain why people are more likely to believe opinion polls are often wrong:

- Confirmation bias. We believe opinion polls are wrong and are more likely to remember the cases where polls are wrong.

- Negativity bias. We remember opinion polls that are wrong and forget the good polls.

- Availability bias. We pay attention to the information that is available to us – without considering what information is not available.

I am sure there are other explanations at play as well, but my guess is that the above explanations can account for a significant proportion of why people are more likely to believe that polls get it more wrong than they actually do.

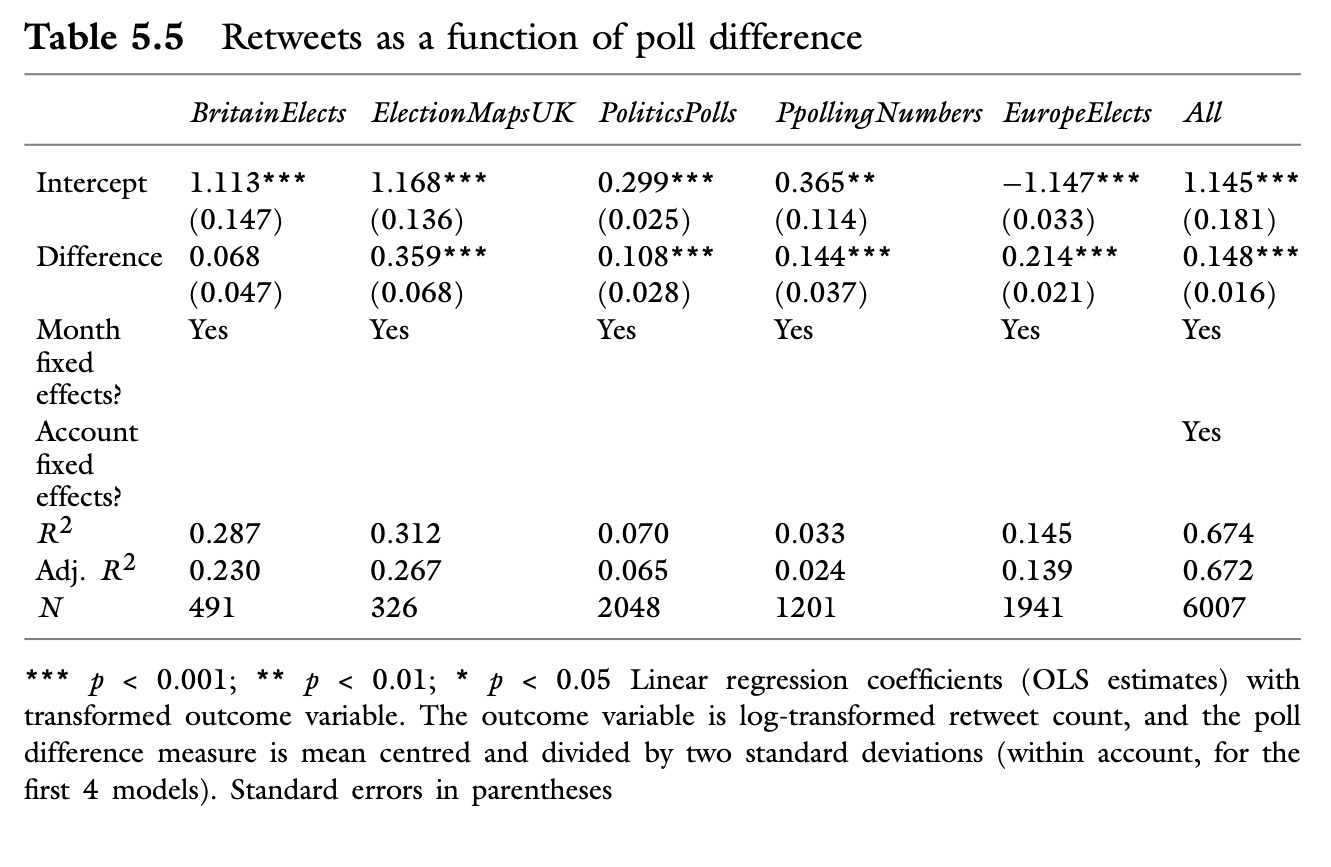

Fifth, we should study opinion polls on social media more than we currently do. Opinion polls are often shared on social media, but do some opinion polls get more attention? The short answer is yes. Some opinion polls get little to no attention whereas other opinion polls go viral. Here is an example of a poll that went viral in 2019:

In our book, we collected data on five popular poll aggregation accounts covering the UK (@BritainElects and @ElectionMapsUK), elections across Europe (@EuropeElects) and the United States (@Politics_Polls and @PpollingNumbers). Together, these accounts were, to our knowledge, the biggest and most popular poll aggregation accounts on Twitter with an aggregate following of more than one million users.

We collected the most recent tweets from these accounts using Twitter’s API and the rtweet R package. This gave us information on the number of retweets each of these tweets had at the time of the data collection. The most recent tweets were collected on 25 January 2021, and the data on retweets reflects the numbers at that time. For each tweet where we could get information on a change in percentage points, we extracted this change and kept the largest change. In the above tweet, for example, the largest change was 6. In a series of regression models, we regressed the number of retweets on the maximum change in the poll. The results are provided in Table 5.5 in the book.
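To make the two steps described above more concrete, here is a minimal sketch in Python. It is not the R code used for the book. The tweet format (a signed change in parentheses after each party), the function names `max_change` and `ols_line`, and the example tweet are all assumptions made for illustration. The plain least-squares line is only a stand-in and not necessarily the model specification reported in Table 5.5.

```python
import re

# Assumed format: each party is followed by a signed change in parentheses,
# e.g. "CON: 28% (-6)". Requiring a sign avoids matching things like "(2019)".
CHANGE_PATTERN = re.compile(r"\(([+\-−]\d+(?:\.\d+)?)\)")


def max_change(tweet_text):
    """Return the largest absolute change found in the tweet, or None if there is none."""
    changes = [
        abs(float(raw.replace("−", "-")))  # normalise the Unicode minus sign
        for raw in CHANGE_PATTERN.findall(tweet_text)
    ]
    return max(changes) if changes else None


def ols_line(x, y):
    """Intercept and slope of a simple least-squares line y = a + b * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Made-up tweet text, not taken from the collected data.
example = "LAB: 30% (+2) CON: 28% (-6) LDEM: 20% (+4)"
print(max_change(example))
```

With one maximum change per tweet, `ols_line(changes, retweets)` would give a slope comparable in spirit to the ‘Difference’ coefficient: a positive slope means that polls showing larger changes tend to get more retweets.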

In brief, the greater the difference, the greater the number of retweets (as indicated by the coefficients next to ‘Difference’ in the regression models). Opinion polls that show little to no differences get less attention – not only in the traditional media, but also on social media.

Sixth, there are three different ways journalists can engage with the margin of error. The good way is to understand uncertainty and make no mistakes in the interpretation of said uncertainty. The bad way is to misunderstand uncertainty and misinterpret changes that are not changes. The ugly way is to understand uncertainty and still make mistakes. Why should we care about whether journalists engage with the margin of error in a bad or ugly way? Because the solution might not simply be about informing journalists about the margin of error if they are already familiar with such concepts and still make mistakes.
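For readers who want to see what "changes that are not changes" means in numbers, here is a minimal sketch in Python. It assumes simple random sampling, two independent polls and a 95% confidence level (z = 1.96). Real polls use weighting and other designs, so the true uncertainty is typically larger. The function names and the example numbers are hypothetical.

```python
import math


def margin_of_error(share, n, z=1.96):
    """Margin of error for a single share (0-1) under simple random sampling."""
    return z * math.sqrt(share * (1 - share) / n)


def change_exceeds_uncertainty(share_before, share_after, n_before, n_after, z=1.96):
    """True if the change between two independent polls is larger than its sampling uncertainty."""
    se_diff = math.sqrt(
        share_before * (1 - share_before) / n_before
        + share_after * (1 - share_after) / n_after
    )
    return abs(share_after - share_before) > z * se_diff


# Hypothetical party at 25% in a poll with 1,000 respondents.
print(round(100 * margin_of_error(0.25, 1000), 1))  # roughly 2.7 percentage points

# A move from 25% to 26% is within the noise; a move from 25% to 31% is not.
print(change_exceeds_uncertainty(0.25, 0.26, 1000, 1000))
print(change_exceeds_uncertainty(0.25, 0.31, 1000, 1000))
```

Under these assumptions, a headline about a one-point gain reports a change that cannot be distinguished from sampling noise.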

Seventh, there are distinct challenges to address. For pollsters, the main challenge is to ensure that opinion polls continue to be representative. In the United States, this especially relates to taking trust-induced non-response seriously. For journalists, the main challenge is to report opinion polls better (and not to stop reporting opinion polls altogether).

You can actually find the talk on YouTube (my presentation begins around the 13-minute mark). I have never gotten used to giving talks with no immediate feedback (i.e., where you can’t see the audience), but I am sure I will watch the talk at some point in the near future. I also got a few great questions, including what I think politics would look like if we simply got rid of opinion polls. I guess it will be no spoiler to say that I do not think politics would be any better without opinion polls.

There is a lot of stuff I considered talking about but did not have the time to deal with. For example, the different ways in which opinion polls might shape public opinion. I have been thinking a lot about a distinction between direct and indirect bandwagon effects. A direct bandwagon effect is when voters come into direct contact with opinion polls, consume the information and act upon the public support for the parties. If you see a party is ahead, you are more likely to vote for the party. An indirect bandwagon effect is when a person is still affected by opinion polls, but not through direct contact with the polls. Instead, politicians are more likely to follow these polls, and that can affect their level of confidence, the cohesion within a party, respect from other parties/politicians, etc. All of these changes can then, indirectly, shape public opinion.

When preparing my talk, it was also a good opportunity to read a bit more about opinion polls in Portugal. The most relevant papers I could find were Magalhães (2005) and Wright et al. (2014). Another recent study I enjoyed is Pereira (2019) on how political parties respond to opinion polls.