Do low response rates bias Eurobarometer's estimates of public support for the EU? That is the argument presented in this story in the Danish newspaper Information. As the journalist behind the story writes on Twitter: “EU’s official public opinion survey – Eurobarometer – systematically overestimates public support for the EU”.

As I described in a previous post (in Danish), I talked to several journalists this week and argued that the response rate is informative but neither sufficient nor even necessary for obtaining a representative sample. In particular, I do not understand the following recommendation in the article: “Experts consulted by Information estimate that the response rate ought to reach 45-50% before a survey is representative.” That is simply a weird rule of thumb.
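A standard way to see why the response rate alone settles little is Bethlehem's approximation from the survey methodology literature (it is not used in the Information article or in the analysis below). Assume that each person has some probability $\rho$ of taking part in the survey. The bias of the mean among respondents, $\bar{y}_R$, is then approximately:

$$
\operatorname{bias}(\bar{y}_R) \approx \frac{\operatorname{Cov}(\rho, y)}{\bar{\rho}} = \frac{R_{\rho y}\, S_{\rho}\, S_{y}}{\bar{\rho}}
$$

Here $\bar{\rho}$ is the mean response propensity (roughly the response rate), $S_{\rho}$ and $S_{y}$ are standard deviations, and $R_{\rho y}$ is the correlation between the propensity to respond and the survey variable $y$ (for example, attachment to the EU). A low response rate magnifies the bias only when $R_{\rho y}$ is non-zero. If willingness to take part is unrelated to EU attitudes, even a low response rate gives an approximately unbiased estimate, while a high response rate offers no protection when that correlation is strong.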

That being said, what is the actual evidence presented by the journalist that Eurobarometer systematically overestimates public support for the EU? None. Accordingly, based on the coverage, I am not convinced that the response rate significantly affects how positive respondents are towards the EU in the Eurobarometer data.

The weird thing is that the journalist provides no evidence for the claim and simply assumes that a low response rate leads to a systematic bias in the responses. Notably, I am not the first to point out a problem with the coverage. As Patrick Sturgis, Professor at London School of Economics, points out on Twitter, the piece “provides no evidence that Eurobarometer overestimates support for the EU”.

There is no easy way to assess the extent to which this is a problem. The issue is that we (obviously) do not have data on the people who decide not to participate in Eurobarometer. Ideally, we would have the exact same questions asked in different surveys at the same time and in the same countries.

However, it is still relevant to look at the data we have and see whether anything is in line with the criticism raised by Information. In short, I see nothing in the data that confirms the criticism raised by the coverage.

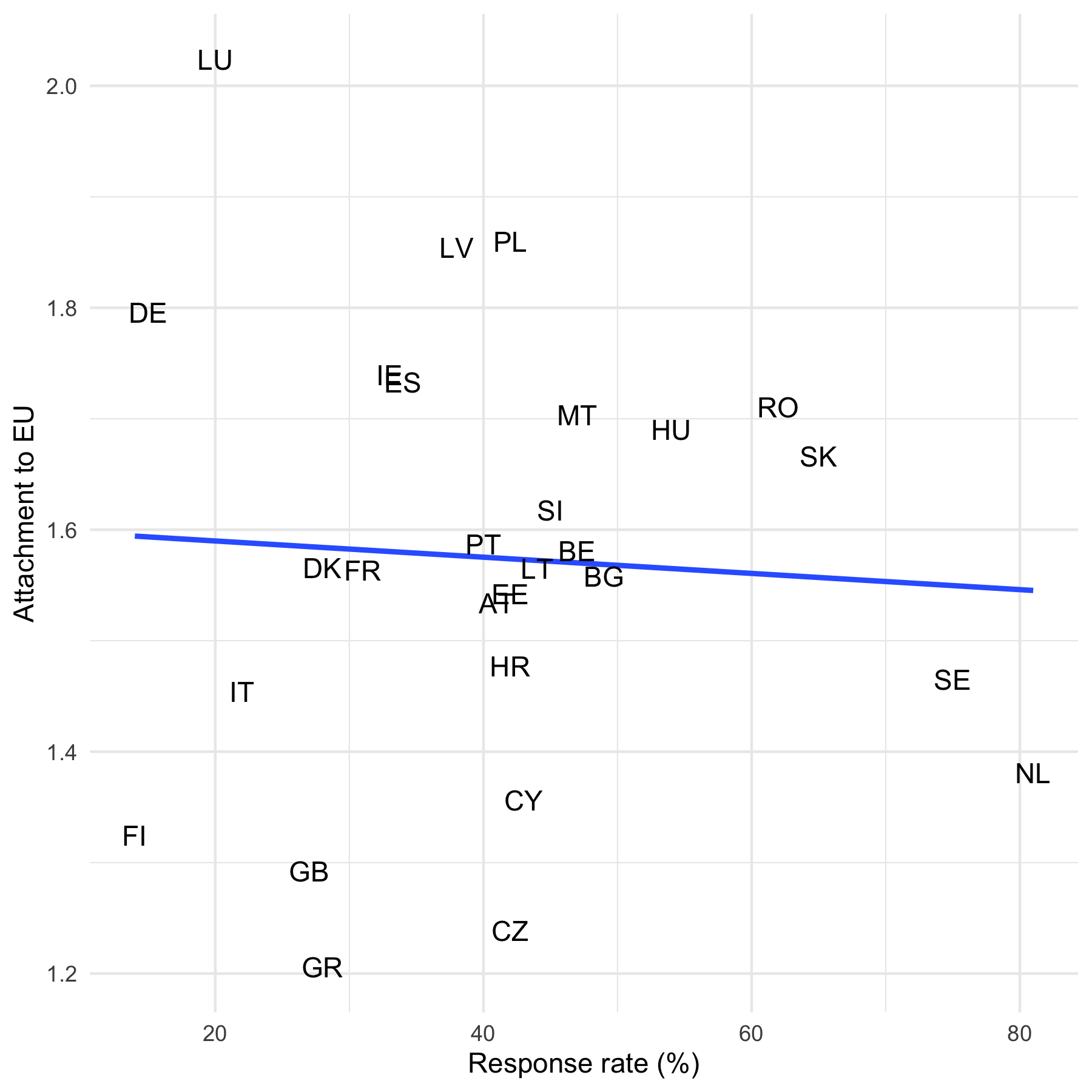

First, when I look at the response rates in Eurobarometer 89.1 and support for the EU, I find no evidence that samples with lower response rates are more supportive. For example, there is no correlation between how attached people feel to the European Union and the response rate.
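The "no correlation" check can be sketched as a Pearson correlation across countries. The sketch below is illustrative and is not the code behind the figure. The input format, a mapping from a country code to `(response_rate, share_attached)`, and the function names are hypothetical. No Eurobarometer numbers are included.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length (at least 2 values)")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def response_rate_vs_attachment(rows: dict[str, tuple[float, float]]) -> float:
    """Correlate country-level response rates with the share feeling attached.

    `rows` is a hypothetical input format: country code -> (response_rate,
    share_attached), both given as proportions between 0 and 1.
    """
    rates = [rate for rate, _ in rows.values()]
    shares = [share for _, share in rows.values()]
    return pearson_r(rates, shares)
```

The article's claim predicts a clearly negative coefficient, with lower response rates going together with higher attachment. A coefficient near zero corresponds to "no correlation". With only one observation per country, though, the estimate is noisy.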

In the figure above, respondents interviewed in the UK are among those who feel least attached to the EU, even though the UK sample also has a low response rate (well below the “representative” 45-50%). The counterfactual argument is that if the response rate were higher, a greater proportion of the respondents would feel less attached to the EU. This is possible, but I am not convinced that the response rate is the most important metric for assessing how representative the data are.
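The counterfactual can be made concrete with a small simulation. Everything in it is hypothetical: the population size, the true share of attached people (0.5), and the response propensities are invented for illustration and are not taken from Eurobarometer. The point is only that the response rate on its own does not determine the bias. What matters is whether the propensity to respond differs between people who do and do not feel attached.

```python
import random


def simulate_survey(
    n: int,
    p_attached: float,
    rate_attached: float,
    rate_not_attached: float,
    seed: int = 0,
) -> tuple[float, float]:
    """Simulate one survey of a hypothetical population of size n.

    Each person feels attached to the EU with probability p_attached and then
    responds with a propensity that may depend on that attitude. Returns
    (response_rate, share_attached_among_respondents).
    """
    rng = random.Random(seed)
    respondents = 0
    attached_respondents = 0
    for _ in range(n):
        attached = rng.random() < p_attached
        propensity = rate_attached if attached else rate_not_attached
        if rng.random() < propensity:
            respondents += 1
            attached_respondents += int(attached)
    return respondents / n, attached_respondents / respondents


if __name__ == "__main__":
    # Hypothetical scenarios: the true share attached is 0.5 in all of them.
    scenarios = [
        ("low rate, propensity unrelated to attitude", 0.2, 0.2),
        ("high rate, propensity unrelated to attitude", 0.8, 0.8),
        ("~50% rate, attached people more willing", 0.6, 0.4),
    ]
    for label, r_att, r_not in scenarios:
        rate, estimate = simulate_survey(100_000, 0.5, r_att, r_not)
        print(f"{label}: response rate {rate:.2f}, estimated share {estimate:.2f}")
```

In expectation, the first two scenarios both estimate a share of about 0.5, whether the response rate is 20% or 80%. The third scenario estimates about 0.6 (0.30 / 0.50), even though its response rate sits right at the “representative” 45-50% threshold.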

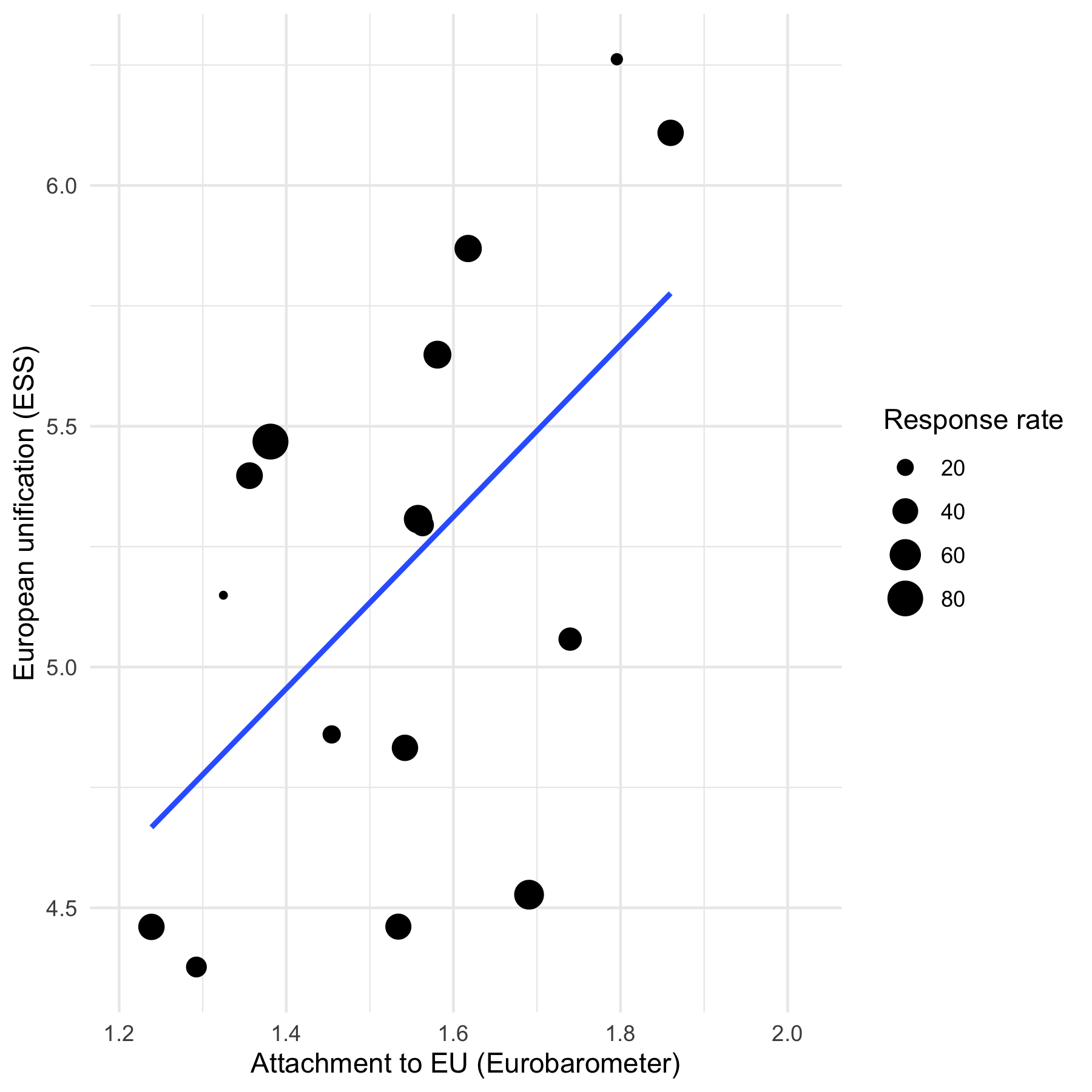

Second, if the low response rate were a problem, countries with a low response rate in Eurobarometer should yield systematically more positive estimates of EU support than other datasets do. In the figure above, I plot the Eurobarometer estimates of attachment to the EU against the answers from respondents in the 2018 European Social Survey (Round 9) to a question on preferences for European unification.

The size of each dot reflects the response rate (larger dots indicate higher response rates). There is a strong correlation between the estimates in the two surveys: in countries where more people feel attached to the EU (in Eurobarometer), more people also prefer European unification (in the European Social Survey).

More importantly, the countries with a low response rate are not outliers. Nothing here suggests that countries with lower response rates are much more positive towards the EU in Eurobarometer than in the European Social Survey.
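One way to put this visual outlier check into numbers is sketched below. It is a hypothetical formalization, not how the figure was produced. The two surveys ask different questions, so their levels cannot be compared directly. Instead, the sketch predicts each country's Eurobarometer estimate from its ESS estimate with a least-squares line. It then asks whether the leftover "extra positivity" is larger where the response rate is lower. The row format, the function names, and the use of the Eurobarometer response rate are assumptions.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length (at least 2 values)")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def ols_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = a + b * x; returns (a, b)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b


def nonresponse_check(rows: dict[str, tuple[float, float, float]]) -> tuple[float, float]:
    """Hypothetical rows: country -> (ess_pro_unification, eb_attached, eb_response_rate).

    Returns (r_between_surveys, r_residual_vs_response_rate). A residual is
    how much more positive Eurobarometer is than the ESS-based prediction.
    """
    ess = [row[0] for row in rows.values()]
    eb = [row[1] for row in rows.values()]
    rate = [row[2] for row in rows.values()]
    a, b = ols_fit(ess, eb)
    residuals = [y - (a + b * x) for x, y in zip(ess, eb)]
    return pearson_r(ess, eb), pearson_r(residuals, rate)
```

If low response rates inflated the Eurobarometer estimates, the second coefficient would be clearly negative. A first coefficient well above zero corresponds to the strong agreement between the two surveys.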

I am not saying that any of this is conclusive evidence that there is no reason for concern, but I simply do not see any significant problems in the data. That said, it would be great if the journalist could provide any evidence for the claim that Eurobarometer “systematically overestimates public support for the EU”.

Why might there be no significant problem? One reason, as described by a spokeswoman for the European Commission here, is that “respondents are not told in the beginning of their face-to-face interview that the survey is done for an EU institution”.

Finally, there is something odd about a story like this. Why, for example, did the journalist not include any of the potential caveats I mention here? I know that the journalist talked to an expert who did not agree with the framing of the article, but that was not mentioned in the article. How many experts were contacted who did not buy into the premise of the story? For the sake of transparency, it would be great if the journalist could declare his … response rate.